Why an “AI‑on” typing test belongs in 2026

Developers increasingly keep AI code assistants and editor autocomplete turned on, but their confidence in those tools has cooled. Stack Overflow’s 2025 survey reports that 84% of developers use or plan to use AI tools, yet more respondents actively distrust AI accuracy (46%) than trust it (33%), and positive favorability fell to 60% compared with the previous year. That is why a code‑aware typing test that measures speed with AI and autocomplete enabled is more truthful than sterile, text‑only WPM: it reflects how developers actually work now. (survey.stackoverflow.co)

JetBrains’ 2025 ecosystem study echoes the same reality: AI usage is mainstream (85% regularly use AI for coding; 62% rely on at least one coding assistant/agent/editor), so benchmarks need to account for these tools rather than pretend they’re off. (blog.jetbrains.com)

What plain WPM misses for programmers

Conventional typing tests optimize for flowing natural language. Coding is different:

- It’s symbol‑heavy (brackets, quotes, operators) and IDEs often auto‑insert pairs, which changes your keystroke profile. (code.visualstudio.com)

- Developers spend substantial time navigating (jumping between files, moving the cursor, selecting blocks) rather than entering text continuously. A classic study in IEEE Transactions on Software Engineering (TSE) found that developers spent about 35% of their time on the mechanics of navigation during maintenance tasks. Measuring only characters per minute hides this reality. (ieeexplore.ieee.org)

- Acceptance and editing of AI/autocomplete suggestions are now core to “how fast you code.” GitHub even surfaces acceptance rate and related usage metrics for Copilot because they correlate with trust and usefulness. (docs.github.com)

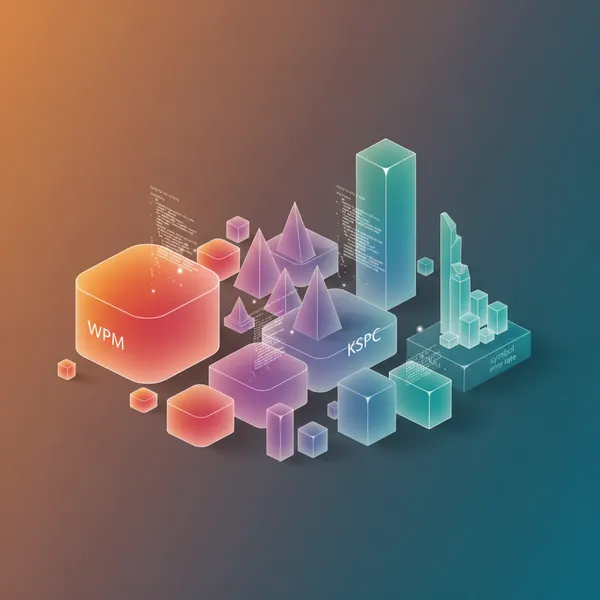

Meanwhile, HCI research shows that keystrokes‑per‑character (KSPC) captures effort beyond raw speed. For typing tests that aim to reflect developer performance, KSPC adapted for code can reveal whether speed comes from smart completions or from heavy backspacing and corrections. (yorku.ca)

Design a realistic “AI‑on” programmer typing test

Here’s a practical blueprint your typing‑test platform can implement.

1) Instrument completions and edits

Log when users accept inline completions (typically Tab/Enter) and compute:

- Autocomplete acceptance rate: accepted suggestions / shown suggestions. (This mirrors enterprise Copilot dashboards.) (docs.github.com)

- Edits per accepted suggestion: how much a user trims or rewrites immediately after accepting.

- Accepted‑and‑retained characters: what portion of a suggestion survives subsequent edits (GitHub treats retention as a quality signal, not just acceptance). (github.blog)

- Time‑to‑first‑edit after acceptance: quick edits can indicate low suggestion fit.

Tip: distinguish inline completions from chat insertions, and count partial line acceptances. GitHub’s guidance notes telemetry nuances; your test should clarify what “counts” to participants. (docs.github.com)
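As a rough illustration, the four completion metrics above could be computed from a per-suggestion event log like the one below. This is a minimal sketch: the `SuggestionEvent` schema and the `completion_metrics` helper are hypothetical names invented for this example, not part of any Copilot or editor API, and partial acceptances are not modeled.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class SuggestionEvent:
    """One inline suggestion shown during a test run (hypothetical schema)."""
    suggested_chars: int                   # length of the suggested text
    accepted_at: Optional[float] = None    # seconds since test start; None if never accepted
    first_edit_at: Optional[float] = None  # first edit inside the accepted text, if any
    edit_keystrokes: int = 0               # keystrokes spent editing the accepted text
    retained_chars: int = 0                # suggested characters still present at submission


def completion_metrics(events: Sequence[SuggestionEvent]) -> dict:
    """Aggregate acceptance, post-accept editing, retention, and edit latency."""
    accepted = [e for e in events if e.accepted_at is not None]
    accepted_chars = sum(e.suggested_chars for e in accepted)
    delays = [e.first_edit_at - e.accepted_at for e in accepted if e.first_edit_at is not None]
    return {
        # accepted suggestions / shown suggestions
        "acceptance_rate": len(accepted) / len(events) if events else 0.0,
        # average editing effort spent on each accepted suggestion
        "edits_per_accept": sum(e.edit_keystrokes for e in accepted) / len(accepted) if accepted else 0.0,
        # share of accepted characters that survive to the final submission
        "retention": sum(e.retained_chars for e in accepted) / accepted_chars if accepted_chars else 0.0,
        # None when no accepted suggestion was edited afterwards
        "mean_time_to_first_edit": sum(delays) / len(delays) if delays else None,
    }


events = [
    SuggestionEvent(40, accepted_at=1.5, first_edit_at=2.0, edit_keystrokes=4, retained_chars=30),
    SuggestionEvent(20),                                   # shown, never accepted
    SuggestionEvent(10, accepted_at=9.25, retained_chars=10),
    SuggestionEvent(15),                                   # shown, never accepted
]
metrics = completion_metrics(events)
```

Keeping one record per shown suggestion (rather than only per accepted one) is what makes the acceptance-rate denominator available; chat insertions would need their own event type if the test supports them.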

2) Add a “KSPC for code” panel

Report KSPC separately for:

- KSPC(raw): all keystrokes (typing, navigation keys, backspaces) divided by characters in the final submission.

- KSPC(net‑with‑AI): give credit for accepted completions by counting each accept action as one keystroke rather than the full suggestion length, so a well‑fitting completion lowers the ratio. This highlights keystroke savings from AI and prediction, which is what KSPC was designed to characterize. (yorku.ca)

Also include classic accuracy metrics inspired by text‑entry research (e.g., corrected vs. uncorrected errors) to balance “how fast” with “how careful.” (yorku.ca)
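One way to compute the two KSPC figures from a stream of classified key events is sketched below. The event labels (`"char"`, `"backspace"`, `"nav"`, `"accept"`) and the `kspc_panel` helper are hypothetical. Note one assumption that goes beyond the text: the net figure here also leaves out navigation keys so it isolates text-entry effort; the description above only specifies that an accept counts as a single keystroke.

```python
from collections import Counter
from typing import Iterable

# Event kinds that count toward text entry in the net figure (assumption of this sketch).
ENTRY_KINDS = {"char", "backspace", "accept"}


def kspc_panel(event_kinds: Iterable[str], final_chars: int) -> dict:
    """Return KSPC(raw) and KSPC(net-with-AI) for one test run."""
    if final_chars <= 0:
        raise ValueError("final submission must contain at least one character")
    counts = Counter(event_kinds)
    raw_keystrokes = sum(counts.values())  # every key event, including navigation
    net_keystrokes = sum(n for kind, n in counts.items() if kind in ENTRY_KINDS)
    return {
        "kspc_raw": raw_keystrokes / final_chars,
        "kspc_net_with_ai": net_keystrokes / final_chars,
    }


# 60 typed characters, 6 backspaces, 10 navigation keys, and 2 accepted completions
# that together produced a 120-character submission.
log = ["char"] * 60 + ["backspace"] * 6 + ["nav"] * 10 + ["accept"] * 2
panel = kspc_panel(log, final_chars=120)
```

A value below 1.0 means the participant produced more characters than they pressed keys, which is the keystroke saving the panel is meant to surface.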

3) Measure symbol handling and auto‑pairs

Coding accuracy is often decided by symbols. Track a dedicated Symbol Error Rate that flags:

- Mismatched or missing (), [], {}, quotes, backticks

- Stray delimiters introduced by over‑eager auto‑pairing

- Language‑specific closers (e.g., HTML closing tags, CSS closing braces)

Most modern editors auto‑close pairs; document the test’s default behavior and attribute whether the user or the editor produced the closing token. Provide toggles for auto‑close on/off so participants can mirror their real setup. (code.visualstudio.com)
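A minimal sketch of the delimiter check behind a Symbol Error Rate might look like the following. It is deliberately not language-aware (comments, raw strings, and template literals would need a real lexer or linter), and both function names and the choice of denominator (delimiter characters in the reference snippet) are assumptions made for this example.

```python
PAIRS = {")": "(", "]": "[", "}": "{"}
OPENERS = set(PAIRS.values())
QUOTES = {'"', "'", "`"}


def delimiter_errors(code: str) -> list[tuple[int, str]]:
    """Return (index, message) for each unbalanced bracket or quote in `code`."""
    errors = []
    stack = []    # (opener, index) for brackets that are still open
    quote = None  # (quote_char, index) while inside a string literal
    i = 0
    while i < len(code):
        ch = code[i]
        if quote is not None:
            if ch == "\\":
                i += 2  # skip the escaped character
                continue
            if ch == quote[0]:
                quote = None
        elif ch in QUOTES:
            quote = (ch, i)
        elif ch in OPENERS:
            stack.append((ch, i))
        elif ch in PAIRS:
            want = PAIRS[ch]
            if any(op == want for op, _ in stack):
                # Pop back to the matching opener; anything skipped was never closed.
                while stack[-1][0] != want:
                    op, idx = stack.pop()
                    errors.append((idx, f"unclosed {op!r}"))
                stack.pop()
            else:
                errors.append((i, f"stray {ch!r}"))
        i += 1
    if quote is not None:
        errors.append((quote[1], f"unterminated {quote[0]!r}"))
    errors.extend((idx, f"unclosed {op!r}") for op, idx in stack)
    return errors


def symbol_error_rate(typed: str, reference: str) -> float:
    """Delimiter errors in `typed` per delimiter character in `reference` (naive count)."""
    total = sum(ch in OPENERS or ch in PAIRS or ch in QUOTES for ch in reference)
    return len(delimiter_errors(typed)) / total if total else 0.0


reference = 'foo(bar[1], "x")'
typed = 'foo(bar[1, "x")'  # the closing ] was dropped
found = delimiter_errors(typed)
rate = symbol_error_rate(typed, reference)
```

Attributing each error to the user or to auto-pairing would additionally require the key event log, since the final text alone does not show who inserted a given closer.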

4) Capture navigation and cursor time

Separate “entry time” from “navigation time” (cursor movement, selections, jumps, find‑symbol, go‑to‑file). Report a Navigation Ratio = navigation time / total task time. Given evidence that navigation consumes a sizable share of developer effort, a lower Navigation Ratio on the same task at equal accuracy is a useful productivity signal. (ieeexplore.ieee.org)
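The Navigation Ratio can be sketched from timestamped events as shown here. The `(timestamp, kind)` tuples and the `navigation_ratio` helper are hypothetical, and the attribution rule is an assumption: the gap before each event is charged to that event's kind, so time spent reaching for an arrow key counts as navigation.

```python
from typing import Sequence, Tuple


def navigation_ratio(events: Sequence[Tuple[float, str]], start: float, end: float) -> float:
    """Navigation time / total task time.

    `events` must be sorted by timestamp; each kind is "entry" or "nav".
    The interval leading up to an event is attributed to that event's kind.
    """
    total = end - start
    if total <= 0:
        return 0.0
    nav_time = 0.0
    previous = start
    for timestamp, kind in events:
        if kind == "nav":
            nav_time += timestamp - previous
        previous = timestamp
    return nav_time / total


# A 10-second task: typing, two navigation actions, then more typing.
timeline = [(1.0, "entry"), (2.0, "entry"), (4.0, "nav"), (5.0, "nav"), (8.0, "entry"), (10.0, "entry")]
ratio = navigation_ratio(timeline, start=0.0, end=10.0)
```

Long thinking pauses are charged to whichever event ends them under this rule; a production version would probably cap or separately report idle gaps.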

5) Calibrate difficulty with language‑specific corpora

Not all code is created equal. Build task sets from credible, language‑diverse corpora so your tests feel authentic:

- The Stack (BigCode) offers permissively licensed code across 358 languages; you can target symbol‑dense snippets in C/C++/Rust, templating in TypeScript/React, or regex‑heavy Python utilities. (bigcode-project.org)

- Complement with curated functions from CodeSearchNet (Python, Java, JS, Go, PHP, Ruby) to design short, realistic edits and completions. (github.com)

Scale task difficulty by:

- Syntax density (operators per line, nesting depth, literals with quotes/brackets)

- Autocomplete ambiguity (number of plausible continuations)

- Required navigation (cross‑file jumps, symbol lookups)
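To make the three scaling factors concrete, here is a hedged sketch of a difficulty score. The symbol set, the `syntax_density` and `difficulty` helpers, and especially the weights are placeholders chosen for illustration; a real platform would calibrate them against observed completion times.

```python
# Characters treated as "symbols" for density purposes (an arbitrary choice for this sketch).
SYMBOLS = set("()[]{}<>=+-*/%&|^!~?:;,.'\"`\\@#$")


def syntax_density(snippet: str) -> dict:
    """Symbols per non-blank line and maximum bracket nesting depth."""
    lines = [line for line in snippet.splitlines() if line.strip()]
    symbols = sum(ch in SYMBOLS for line in lines for ch in line)
    depth = max_depth = 0
    for ch in snippet:
        if ch in "([{":
            depth += 1
            max_depth = max(max_depth, depth)
        elif ch in ")]}":
            depth = max(0, depth - 1)  # tolerate unbalanced input
    return {
        "symbols_per_line": symbols / len(lines) if lines else 0.0,
        "max_nesting": max_depth,
    }


def difficulty(snippet: str, continuations: int = 1, cross_file_jumps: int = 0,
               weights: tuple = (1.0, 2.0, 1.0, 3.0)) -> float:
    """Weighted sum of syntax density, autocomplete ambiguity, and required navigation."""
    density = syntax_density(snippet)
    w_symbols, w_nesting, w_ambiguity, w_navigation = weights
    return (w_symbols * density["symbols_per_line"]
            + w_nesting * density["max_nesting"]
            + w_ambiguity * continuations
            + w_navigation * cross_file_jumps)


snippet = "def f(x):\n    return [g(x[i]) for i in range(3)]\n"
density = syntax_density(snippet)
score = difficulty(snippet, continuations=2, cross_file_jumps=1)
```

Here `continuations` stands in for the number of plausible completions at the cursor and `cross_file_jumps` for required navigation; both would come from task metadata rather than from the snippet text.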

6) Report productivity metrics alongside WPM

Present a compact scoreboard per language:

- WPM (or characters per minute)

- Autocomplete acceptance rate + accepted‑and‑retained characters

- KSPC(raw) and KSPC(net‑with‑AI)

- Symbol Error Rate and total corrections

- Navigation Ratio and average cursor travel events per minute

This richer view rewards real‑world fluency: the developer who smart‑accepts a long completion and needs few post‑edits should rank better than someone who free‑types quickly but fixes many bracket/quote errors.
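The scoreboard itself can be a plain record that derives WPM from the submission and exposes display rows. The sketch below assumes the common typing-test convention of five characters per word; the `Scoreboard` class and its field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Scoreboard:
    """Per-language results for one test run (hypothetical structure)."""
    language: str
    final_chars: int
    elapsed_seconds: float
    acceptance_rate: float
    retention: float
    kspc_raw: float
    kspc_net_with_ai: float
    symbol_error_rate: float
    corrections: int
    navigation_ratio: float
    cursor_events: int

    def __post_init__(self) -> None:
        if self.elapsed_seconds <= 0:
            raise ValueError("elapsed_seconds must be positive")

    @property
    def wpm(self) -> float:
        # Conventional definition: one "word" is five characters.
        return (self.final_chars / 5) / (self.elapsed_seconds / 60)

    @property
    def cursor_events_per_minute(self) -> float:
        return self.cursor_events / (self.elapsed_seconds / 60)

    def rows(self) -> list[tuple[str, str]]:
        """Label/value pairs in the order the scoreboard lists them."""
        return [
            ("WPM", f"{self.wpm:.1f}"),
            ("Acceptance rate", f"{self.acceptance_rate:.0%}"),
            ("Retained characters", f"{self.retention:.0%}"),
            ("KSPC(raw)", f"{self.kspc_raw:.2f}"),
            ("KSPC(net-with-AI)", f"{self.kspc_net_with_ai:.2f}"),
            ("Symbol Error Rate", f"{self.symbol_error_rate:.1%}"),
            ("Corrections", str(self.corrections)),
            ("Navigation Ratio", f"{self.navigation_ratio:.0%}"),
            ("Cursor events/min", f"{self.cursor_events_per_minute:.1f}"),
        ]


board = Scoreboard("python", final_chars=600, elapsed_seconds=120.0, acceptance_rate=0.5,
                   retention=0.8, kspc_raw=0.65, kspc_net_with_ai=0.57, symbol_error_rate=0.02,
                   corrections=6, navigation_ratio=0.3, cursor_events=30)
for label, value in board.rows():
    print(f"{label:<22}{value}")
```

The sketch keeps every metric as its own row instead of folding them into one composite rank, leaving any weighting decision to the leaderboard design.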

Practical tips to implement it right

- Standardize editor behaviors. Ship the test in a browser editor with transparent defaults (e.g., auto‑close brackets ON, auto‑close quotes ON) and let users toggle them to match their habits. Publish the exact language configuration (pairs, auto‑close rules). (code.visualstudio.com)

- Make AI usage explicit. Offer “AI OFF” and “AI ON” modes. In AI ON, log shown suggestions, accept events, and retention. Explain clearly what the metrics mean so participants can compare their baselines.

- Use language‑aware linters to score symbol errors fast (missing closers, unterminated strings) and attribute them correctly to user vs. auto‑pair behavior. (jetbrains.com)

- Protect privacy. Never upload the participant’s proprietary code; use public corpora and disclose what telemetry you store. Prefer aggregated signals (key event types, timestamps, acceptance events) over full keystroke logs where you can. (bigcode-project.org)

- Don’t over‑optimize a single number. Industry guidance (and GitHub’s own shift beyond pure acceptance rate) shows composite signals better reflect true value. Your leaderboard should be multi‑metric by design. (github.blog)
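Several of these tips amount to publishing an explicit, reproducible test configuration. A possible shape is sketched below; the `SessionConfig` class, its field names, and the telemetry allow-list are all hypothetical and would need to match whatever the platform's editor actually supports.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class SessionConfig:
    """Editor and telemetry settings for one test session (hypothetical schema)."""
    language: str
    ai_mode: str = "off"              # "on" or "off"
    auto_close_brackets: bool = True
    auto_close_quotes: bool = True
    pairs: tuple = (("(", ")"), ("[", "]"), ("{", "}"))
    # Allow-list of stored telemetry fields; note there is no raw key content.
    telemetry_fields: tuple = ("event_kind", "timestamp", "suggestion_shown", "suggestion_accepted")

    def __post_init__(self) -> None:
        if self.ai_mode not in ("on", "off"):
            raise ValueError(f"ai_mode must be 'on' or 'off', got {self.ai_mode!r}")

    def publish(self) -> str:
        """Serialize the exact configuration so participants can inspect it."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)


config = SessionConfig("python", ai_mode="on", auto_close_quotes=False)
published = json.loads(config.publish())
```

Freezing the dataclass means a session's settings cannot drift mid-test, so scores recorded under "AI ON" and "AI OFF" stay comparable.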

The bottom line

Developers are keeping AI and autocomplete on while also double‑checking their outputs. That makes “AI‑on” typing tests the most honest way to benchmark modern coding speed. If your test tracks completions accepted, edits that follow, symbol accuracy, auto‑pair effects, and navigation time, and then calibrates difficulty on real code, you’ll deliver a score that actually means something in 2026. (survey.stackoverflow.co)